
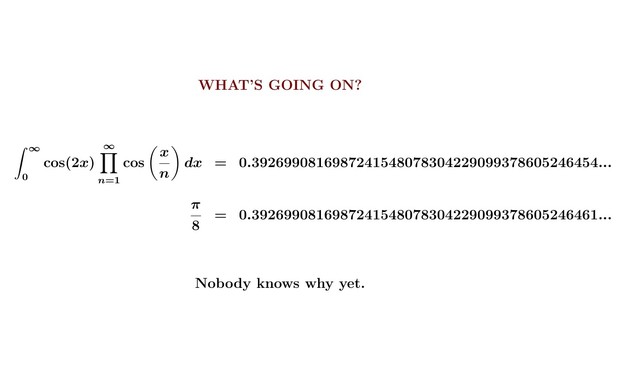

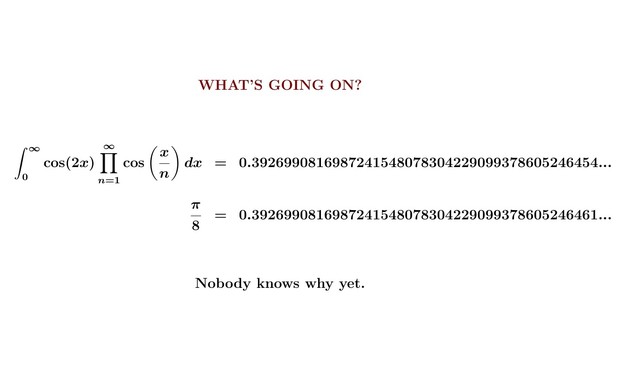

@robinhouston brought this amazing fact to our attention.

It's been studied by some very smart people, like Borwein and Bailey. It's still mysterious. But we're trying to figure it out here:

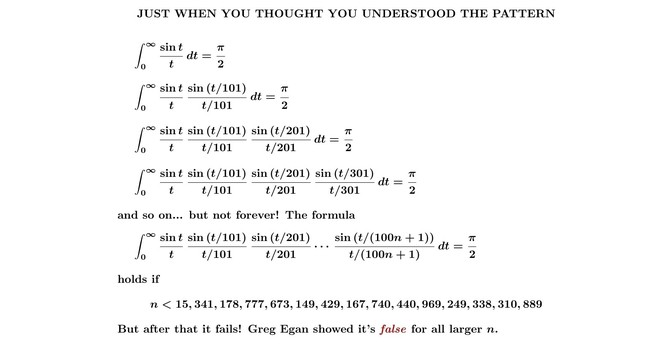

@robinhouston - an amusing fact: in 1995, in a paper on the arXiv, Kent Morrison said "we might conjecture" that

Both these are false, of course! I read about this here:

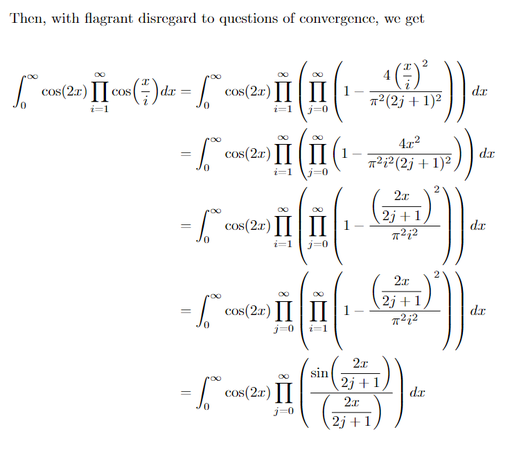

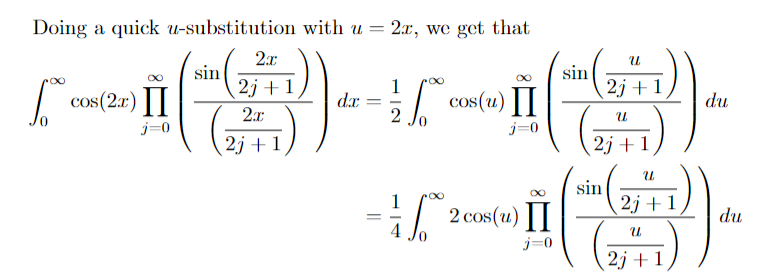

@johncarlosbaez @robinhouston I think I might have something! I played a bit fast and loose with potential convergence issues, but the vibes seem right. https://www.overleaf.com/read/frqszhcjdckk

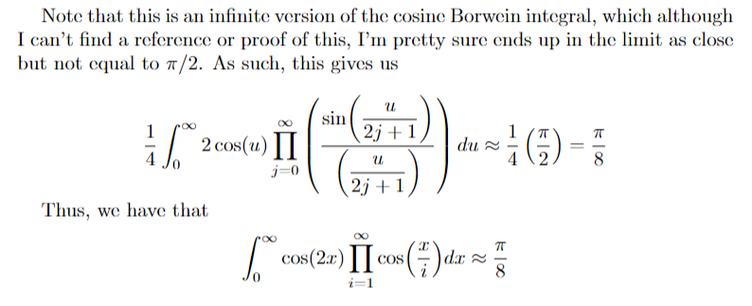

@seano @robinhouston @JadeMasterMath - cool! What do you mean by "an infinite version of the cosine Borwein integral... which ends up in the limit close but not equal to π/2"?

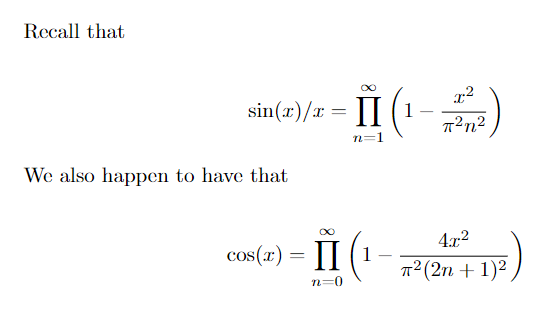

You're reminding me of how

while

but this is not close enough to explain the mystery we're talking about now, and you seem to be hinting at something else.

See https://mathworld.wolfram.com/InfiniteCosineProductIntegral.html

@johncarlosbaez @robinhouston @JadeMasterMath Yeah so by "an infinite version of the cosine Borwein integral" I'm referring to the limit of the Borwein integrals (or at least the limit of their integrands, there may be some convergence issues at play).

In this case in particular, it's the integral of 2cos(𝑥) times the product of sin(𝑥/𝑛)/(𝑥/𝑛) for all odd n. My claim relies on this limit actually equaling a value close to π/2, which I don't have a proof for yet, but it seems like it should work.
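Here is a sketch of how such an identity can go. It is our reconstruction, not necessarily the exact steps in the Overleaf notes. It assumes the integral in question is the MathWorld one linked above, $\int_0^\infty \cos(2x)\prod_{m\ge1}\cos(x/m)\,dx$, and it uses Euler's product $\frac{\sin t}{t}=\prod_{k\ge1}\cos(t/2^k)$. Limits and integrals are interchanged freely, which is exactly the convergence question left open here:

$$
\prod_{n\ \text{odd}} \frac{\sin(2x/n)}{2x/n}
= \prod_{n\ \text{odd}}\ \prod_{k\ge 1} \cos\!\Big(\frac{x}{n\,2^{k-1}}\Big)
= \prod_{m\ge 1} \cos\!\Big(\frac{x}{m}\Big),
$$

because every $m \ge 1$ factors uniquely as $n\,2^{k-1}$ with $n$ odd and $k \ge 1$. Substituting $y = 2x$ then gives

$$
\int_0^\infty \cos(2x) \prod_{m\ge 1}\cos\!\Big(\frac{x}{m}\Big)\,dx
= \frac{1}{2}\int_0^\infty \cos(y) \prod_{n\ \text{odd}} \frac{\sin(y/n)}{y/n}\,dy
= \frac{1}{4}\int_0^\infty 2\cos(y) \prod_{n\ \text{odd}} \frac{\sin(y/n)}{y/n}\,dy .
$$

So if the last integral equals $\pi/2 - \delta$, the first equals $\pi/8 - \delta/4$. A value of $\pi/8 - 7.4\times10^{-43}$ would correspond to $\delta \approx 3.0\times10^{-42}$.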

@johncarlosbaez @robinhouston @JadeMasterMath And this strategy should also apply to that case! The same essential product-swapping argument should allow you to conclude that that integral reduces to the "infinite version of the Borwein integral", the integral of just the product sin(𝑥/𝑛)/(𝑥/𝑛) for all odd n. So if the limit of this also equals something close to π/2, this explains that case as well.
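The product swap we take this strategy to rest on is $\prod_{m\ge1}\cos(x/m) = \prod_{n\ \text{odd}} \frac{\sin(2x/n)}{2x/n}$, and it is easy to sanity-check numerically. Below is a minimal Python sketch. The function names are ours, and both infinite products are truncated after an arbitrarily chosen number of factors, so the two sides only agree up to a truncation error of roughly $x^2/\text{terms}$:

```python
import math


def cos_product(x: float, terms: int) -> float:
    """Truncation of prod_{m>=1} cos(x/m) after `terms` factors."""
    p = 1.0
    for m in range(1, terms + 1):
        p *= math.cos(x / m)
    return p


def sinc(t: float) -> float:
    """Unnormalized sinc: sin(t)/t, with sinc(0) = 1."""
    return 1.0 if t == 0 else math.sin(t) / t


def odd_sinc_product(x: float, terms: int) -> float:
    """Truncation of prod_{n odd} sinc(2x/n) over the first `terms` odd n."""
    p = 1.0
    for n in range(1, 2 * terms, 2):
        p *= sinc(2 * x / n)
    return p


if __name__ == "__main__":
    for x in (0.5, 1.0, 1.5, 3.0):
        lhs = cos_product(x, 200_000)
        rhs = odd_sinc_product(x, 200_000)
        print(f"x = {x}: {lhs:.8f} vs {rhs:.8f}")
```

This checks only the pointwise identity between the integrands. It says nothing about whether the integrals of the truncated products converge to the integral of the limit.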

@johncarlosbaez @robinhouston @JadeMasterMath Indeed, the fact that this second integral has a much larger difference from π/4 than the first integral from π/8 probably comes from the fact that the normal Borwein integral differs from π/2 much more quickly than the cosine Borwein integral. So presumably the limit of the normal integrals will be much farther from π/2 than the limit of the cosine integrals. And specifically these limits should allow us to calculate the observed differences we see!

@seano @robinhouston @JadeMasterMath - okay, I was confused because the most famous Borwein integrals are

and these equal

I don't know what they do as n → ∞.

@seano @robinhouston @JadeMasterMath - so by "cosine Borwein integral" I guess you mean something like

This equals

https://en.wikipedia.org/wiki/Borwein_integral
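For reference, here are the two families in what we take to be the standard form, as on the Wikipedia page linked above. The notation is ours:

$$
\int_0^\infty \prod_{k=0}^{n} \frac{\sin\big(x/(2k+1)\big)}{x/(2k+1)}\,dx = \frac{\pi}{2} \quad (0 \le n \le 6),
\qquad
\int_0^\infty \prod_{k=0}^{7} \frac{\sin\big(x/(2k+1)\big)}{x/(2k+1)}\,dx \approx \frac{\pi}{2} - 2.31\times 10^{-11},
$$

$$
\int_0^\infty 2\cos x \prod_{k=0}^{n} \frac{\sin\big(x/(2k+1)\big)}{x/(2k+1)}\,dx = \frac{\pi}{2} \quad (0 \le n \le 55),
$$

The cosine pattern first fails at $n = 56$, when the factor with $1/113$ enters. Both thresholds match the plateau rule: $\tfrac13 + \dots + \tfrac1{13} < 1 < \tfrac13 + \dots + \tfrac1{15}$ and $\tfrac13 + \dots + \tfrac1{111} < 2 < \tfrac13 + \dots + \tfrac1{113}$.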

This seems extremely related to what you've done, but again I don't know what happens as n → ∞.

@johncarlosbaez @robinhouston @JadeMasterMath Yep, exactly! I played around with the limit of the normal Borwein integrals on WolframAlpha and it appears to converge to a value close to π/2, but I don’t know of any way to prove this or the related conjecture for the limit of the cosine Borwein integrals.

But we could support this conjecture numerically by approximating these limits and comparing them to the integrals. I haven’t done this to much precision, but so far it does seem to work!

@seano @robinhouston @JadeMasterMath - neat! There's a formula for the normal Borwein integrals in the section "General formula" here:

https://en.wikipedia.org/wiki/Borwein_integral#General_formula

It's not really a closed form, but it might help us show

is just slightly less than π/2. And there may be a similar formula for the limit we actually care about now.
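Below is a minimal Python sketch of that kind of general formula for finite products. It is written from the sign-sum form rather than copied from the page, so treat its exact correspondence with Wikipedia's notation as an assumption, and the name `borwein_factor` is ours. It returns the exact rational factor $C$ with $\int_0^\infty \mathrm{sinc}(a_0x)\prod_k \mathrm{sinc}(a_kx)\,dx = \frac{\pi}{2a_0}C$, where $\mathrm{sinc}(t) = \sin t / t$. It sums $2^n$ terms, so it is only practical for small $n$:

```python
from fractions import Fraction
from itertools import product
from math import factorial, pi


def borwein_factor(a0: Fraction, a: list[Fraction]) -> Fraction:
    """Exact C with  int_0^inf sinc(a0 x) * prod_k sinc(a_k x) dx = pi/(2 a0) * C.

    Sign-sum formula: with b_g = a0 + sum_k g_k a_k and eps_g = prod_k g_k,
        C = sum_{g in {+1,-1}^n} eps_g * sign(b_g) * b_g**n / (2**n * n! * prod_k a_k).
    C == 1 exactly when a0 - sum(a) >= 0, i.e. while the plateau survives.
    """
    n = len(a)
    denom = Fraction(2**n * factorial(n))
    for ak in a:
        denom *= ak
    total = Fraction(0)
    for signs in product((1, -1), repeat=n):
        b = a0 + sum(g * ak for g, ak in zip(signs, a))
        eps = 1
        for g in signs:
            eps *= g
        sign_b = (b > 0) - (b < 0)
        total += eps * sign_b * b**n
    return total / denom


if __name__ == "__main__":
    # Plain Borwein family: a0 = 1, a_k = 1/(2k+1).
    for n in range(1, 9):
        a = [Fraction(1, 2 * k + 1) for k in range(1, n + 1)]
        c = borwein_factor(Fraction(1), a)
        deficit = float(1 - c) * pi / 2
        print(f"up to 1/{2 * n + 1}: pi/2 - {deficit:.3e}")
```

The factor is exactly 1 through 1/13 and gives about π/2 − 2.31×10⁻¹¹ at 1/15. The cosine family corresponds to a₀ = 2, since 2cos(x)·sin(x)/x = sin(2x)/x. There, however, the first failure needs 56 factors, so the 2⁵⁶-term sum is out of reach this way. Nothing here addresses the n → ∞ limit.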

@gregeganSF - since you're a whiz on Mathematica, maybe you could numerically investigate

for large

But if it stays very close to

@johncarlosbaez I’m not @gregeganSF, and I’m no whiz on Mathematica, but I can say that evaluating

```mathematica
NIntegrate[
  2 Cos[x] Product[Sinc[x/i], {i, 1, 100001, 2}], {x, 0, Infinity},
  WorkingPrecision -> 50]
```

gives a value that matches π/2 to 41 decimal places.

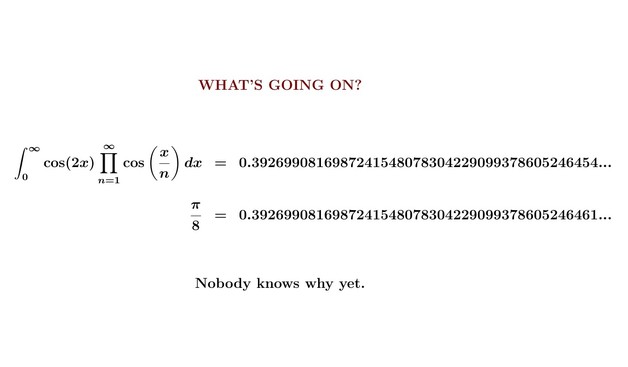

@robinhouston @gregeganSF - wow! So, if @seano's calculations here are correct - and I haven't found a mistake yet - this "explains" the mystery below.

"Explains" in quotes because all it does is reduce this mystery to the question of why

is so close to

@johncarlosbaez @gregeganSF @seano I also wonder if @gjm’s mini-thread at https://mathstodon.xyz/@gjm/109627311459780458 might explain it a level more deeply, though I’m not sufficiently facile with Fourier transforms to have understood it properly yet.

@johncarlosbaez @robinhouston @seano My gut feeling would have been that the repeated convolutions eventually eroded the original rectangular pulse down to zero, but apparently that’s not what happens. Maybe pondering those windowed averages carefully can put a lower bound on the infinite limit.

@gregeganSF - yes, I think that studying those repeated convolutions can put a lower bound on the infinite limit and eventually explain what's going on here.

This may require more of a taste for analysis than any of us have. But I feel the puzzle has now switched from a mystery to something that just requires some careful work.

@johncarlosbaez @gregeganSF @robinhouston I decided to take a look at the original paper by the Borweins to see if they had any insights, and I found out that apparently this might not be an open problem after all? On pages 15 and 16 of https://carma.edu.au/resources/db90/pdfs/db90-119.00.pdf, they derive the identity in essentially the same way I did, and then even show how to obtain lower bounds on the limit (although I don’t fully understand that part). So that’s a fun turn of events! :p

@seano - Nice! I was reading some similar things in other papers by them, but not quite this.

So yes, they knew the tricks you discovered. On page 16 of the pdf file you cite, they say that "lengthy numerical computation" shows that a quantity we're interested in is less than 10⁻⁴¹. Knowing this solves the mystery, in some sense. @robinhouston showed essentially the same thing by running a short Mathematica program.

But I still think a good analyst could find a more conceptual proof!

@gregeganSF @johncarlosbaez @seano This is interesting! I think the Central Limit Theorem might be relevant here: won't it tend to a normal curve whose variance is the sum of the variances of the components? And since the series 1² + (1/3)² + (1/5)² + ⋯ converges, so will the repeatedly-convolved curve.

@robinhouston @johncarlosbaez @seano

That seems to make sense, but either there must be a flaw in the argument, or I’m calculating something incorrectly.

The rectangular pulses we convolve have half-widths a_i=1/(2i-1), which gives variances a_i^2/3, and the sum of these is π^2/24.

I get the value of the Gaussian with that variance, evaluated at x=1, being 0.184428, as compared to 0.25 for the iterations that give π/2 for the integral. So that’s waaay too low!
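These numbers are easy to reproduce. Below is a minimal Python sketch. It assumes, as in the message above, rectangles of half-width aᵢ = 1/(2i−1), each contributing variance aᵢ²/3, and a mean-zero Gaussian with the summed variance. The number of terms is an arbitrary truncation of the infinite sum, and the function names are ours:

```python
import math


def summed_variance(terms: int) -> float:
    """Sum of a_i**2 / 3 for half-widths a_i = 1/(2i-1), i = 1..terms.

    A uniform distribution on [-a, a] has variance a**2 / 3.
    """
    return math.fsum((1.0 / (2 * i - 1)) ** 2 / 3.0 for i in range(1, terms + 1))


def gaussian_pdf(x: float, variance: float) -> float:
    """Density at x of a mean-zero normal distribution with the given variance."""
    return math.exp(-x * x / (2.0 * variance)) / math.sqrt(2.0 * math.pi * variance)


if __name__ == "__main__":
    print(summed_variance(1_000_000), math.pi**2 / 24)  # partial sum vs. the limit pi^2/24
    print(gaussian_pdf(1.0, math.pi**2 / 24))           # about 0.184428
```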

@robinhouston @gregeganSF @johncarlosbaez @seano The CLT is about _identically distributed_ random variables.

If you convolve together a bunch of rectangles of different widths, you don't necessarily get a normal distribution. You _do_ get something whose variance is the sum of the individual variances, but it needn't be normal.

There are some theorems saying when that does and doesn't happen. I _think_ (with only Wikipedia-level knowledge) the Lindeberg criterion shows no normal limit here.

@johncarlosbaez @gregeganSF @seano @gjm Oh, I thought it satisfied Lyapunov's condition? I'll check that.

@robinhouston @johncarlosbaez @gregeganSF @seano I don't _think_ it does, but I make a lot of mistakes and am not an expert on this stuff, so I could very well be wrong.

@seano @johncarlosbaez @gregeganSF @gjm Very much same! (This is why I don't like analysis very much: too many technical side-conditions for my taste.) Let's check.

@robinhouston @seano @johncarlosbaez @gregeganSF @gjm Yeah, I don’t think the CLT applies here, but the Kolmogorov three-series theorem applies and shows that it is almost surely convergent to a well-defined random variable, which is why the Fourier transform shouldn’t be expected to decay to zero.

@seano @R4_Unit @gjm @johncarlosbaez @gregeganSF Ah, thank you! That theorem is new to me. So (if I've understood) it converges provided that the sum of the squares of the pulse-widths does, but not necessarily to a Gaussian.

@robinhouston @seano @gjm @johncarlosbaez @gregeganSF In this case, yes. The first series relates to tail behavior (trivial for us), the second to means after discarding tails (all zero for us), and the third to the variance (finite). This provides an if-and-only-if condition for the almost sure convergence of a random series.
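The probabilistic picture can be written out in a few lines. This is a sketch under assumptions not justified here: the $U_k$ are independent and uniform on $[-1,1]$, expectations and integrals may be interchanged, and $S$ puts no mass on the endpoints. It uses $\frac{\sin t}{t} = \mathbb{E}[\cos(tU)]$ and the Dirichlet integral $\int_0^\infty \frac{\sin(ax)}{x}\cos(sx)\,dx = \frac{\pi}{2}$ for $|s|<a$ (and $0$ for $|s|>a$):

$$
S = \sum_{k\ge 2} \frac{U_k}{2k-1}, \qquad
\prod_{k\ge 2} \frac{\sin\big(x/(2k-1)\big)}{x/(2k-1)} = \mathbb{E}\big[\cos(xS)\big],
$$

$$
\int_0^\infty \prod_{n\ \text{odd}} \frac{\sin(x/n)}{x/n}\,dx
= \mathbb{E}\!\left[\int_0^\infty \frac{\sin x}{x}\cos(xS)\,dx\right]
= \frac{\pi}{2}\Pr\big(|S|<1\big),
$$

$$
\int_0^\infty 2\cos x \prod_{n\ \text{odd}} \frac{\sin(x/n)}{x/n}\,dx
= \mathbb{E}\!\left[\int_0^\infty \frac{\sin 2x}{x}\cos(xS)\,dx\right]
= \frac{\pi}{2}\Pr\big(|S|<2\big).
$$

On this reading, each integral falls short of $\pi/2$ by exactly $\frac{\pi}{2}$ times a tail probability of $S$. $S$ has variance $\sum_{k\ge2} \frac{1}{3(2k-1)^2} = \frac{\pi^2/8 - 1}{3} \approx 0.078$, so Chebyshev alone gives $\Pr(|S|\ge2) \le 0.02$. The true tail must be vastly smaller for a deficit near $10^{-42}$. The same picture seems to account for the 0.25 quoted above. Adding the half-width-1 rectangle for $U_1$, the density of $U_1 + S$ at $1$ is $\tfrac12\Pr(0<S<2) = \tfrac14\Pr(|S|<2)$.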

@robinhouston @seano @gjm @johncarlosbaez @gregeganSF you can also use a Berry-Esseen estimate to bound the distance from a Gaussian (https://en.wikipedia.org/wiki/Berry%E2%80%93Esseen_theorem, then scroll to "non-identical summands").

@robinhouston - "(This is why I don't like analysis very much: too many technical side-conditions for my taste.)"

And yet you were the one who got us into studying this crazy integral!

So apparently you like analysis enough to get us into trouble, but not enough to get us back out.

@johncarlosbaez that's about right, yes! I like the questions, but the answers sometimes make me feel confused and sad.

@johncarlosbaez @robinhouston @gregeganSF @seano

Feels very much like https://www.youtube.com/watch?v=851U557j6HE is the right direction

@jamestwebber - yes, Greg Egan and I have already thought about the stuff in that video - and now Sean O has done some calculations that show exactly how that old stuff is connected to the current puzzle! So I think the mystery is dissipating.

Here's the sort of thing Greg knows how to prove:

@johncarlosbaez I guess I'm unclear on what the specific mystery is for this example. The question of "why does it do that" seems broadly answered, but maybe you have a more specific question you're trying to solve.

When I posted my question, I didn't know why the integral I wrote down is about

π/8 - 7.4×10⁻⁴³

That was the specific mystery. It was by no means "broadly answered" in my mind - it was very mysterious.

However, in response to my post, Sean O has done a calculation which makes this fact much easier to understand:

https://mathstodon.xyz/@seano/109627356107653590

So now the solution is in sight, and I can easily imagine mathematicians filling in the remaining details.

I don't know if you watched the whole thing, but I think the second half of 3blue1brown's video is really useful here for illustrating what's happening. And as Sean O posted, I think this is probably shown (in a general way) in the original paper: https://mathstodon.xyz/@seano/109628832603525145

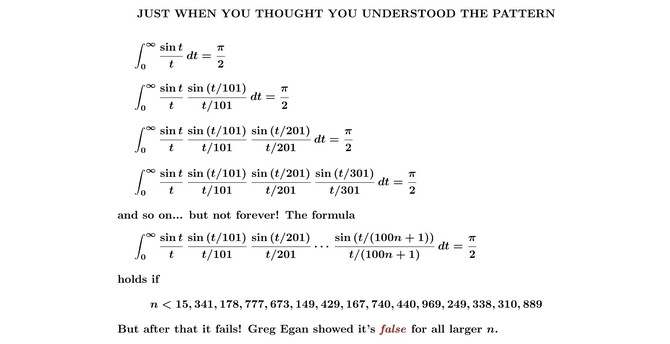

@jamestwebber - happy new year! Yes, these "patterns that eventually fail" are quite shocking at first. Some people find them discouraging, but once I understood a bit about why they happen, I started liking them.

What is the point here? That the integral isn't π/8, but matches π/8 to several times more decimal digits of precision than are used in most scientific and engineering calculations?

@Kazinator - so far, all the coincidences I've seen that are "this good" have interesting mathematical explanations.

For example

exp(π√163) = 262537412640768743.99999999999925...
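These digits can be reproduced with Python's standard `decimal` module. Below is a minimal sketch. π comes from Machin's formula π/4 = 4 arctan(1/5) − arctan(1/239), which is our choice (any high-precision π would do), and the helper names are ours:

```python
from decimal import Decimal, localcontext


def arctan_inv(n: int) -> Decimal:
    """arctan(1/n) from its Taylor series, at the current Decimal precision."""
    x = Decimal(1) / n
    x2 = x * x
    term = x          # holds (-1)**k * x**(2k+1)
    total = x
    k = 0
    while True:
        k += 1
        term *= -x2
        new_total = total + term / (2 * k + 1)
        if new_total == total:  # further terms no longer change the result
            return total
        total = new_total


def exp_pi_sqrt163(digits: int = 40) -> Decimal:
    """exp(pi * sqrt(163)) rounded to `digits` significant digits."""
    with localcontext() as ctx:
        ctx.prec = digits + 10                          # guard digits
        pi = 4 * (4 * arctan_inv(5) - arctan_inv(239))  # Machin's formula
        value = (pi * Decimal(163).sqrt()).exp()
    with localcontext() as ctx:
        ctx.prec = digits
        return +value                                   # unary plus rounds to ctx.prec


if __name__ == "__main__":
    print(exp_pi_sqrt163(40))
    print(640320**3 + 744)  # 262537412640768744, the nearest integer
```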

leads to a world of deep insights.

According to:

https://en.wikipedia.org/wiki/Borwein_integral

There are some good explanations (that even I can sort of follow).

The repeated convolution, which eventually whittles away the plateau of a square pulse so that its peak starts to lose height, is very easy to understand. It explains why the integrals in this family equal a certain value under certain conditions, and why the pattern breaks once those conditions fail.
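In the plateau picture, the break point reduces to a running sum. The rectangle from sin(x)/x has half-width 1. With the factor 2cos(x) folded in it has half-width 2, since 2cos(x)·sin(x)/x = sin(2x)/x. Each further factor sin(x/n)/(x/n) shrinks the plateau by 1/n. Below is a minimal Python sketch under that assumption, using exact fractions and a function name of our own:

```python
from fractions import Fraction


def first_failure(bound: int) -> int:
    """Smallest odd m such that 1/3 + 1/5 + ... + 1/m exceeds `bound`.

    In the plateau picture the integral stays at pi/2 while this running sum
    is at most `bound`: bound = 1 for the plain family, 2 for the 2cos(x) one.
    """
    total = Fraction(0)
    m = 1
    while total <= bound:
        m += 2
        total += Fraction(1, m)
    return m


if __name__ == "__main__":
    print(first_failure(1))  # 15
    print(first_failure(2))  # 113
```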

@AndresCaicedo - I don't go around saying that to everyone. But Robin and I have been talking about the failure of these identities for days now. There are papers about them, and a whole web page about them:

https://mathworld.wolfram.com/InfiniteCosineProductIntegral.html